Physical AI – Digital intelligence, physical results

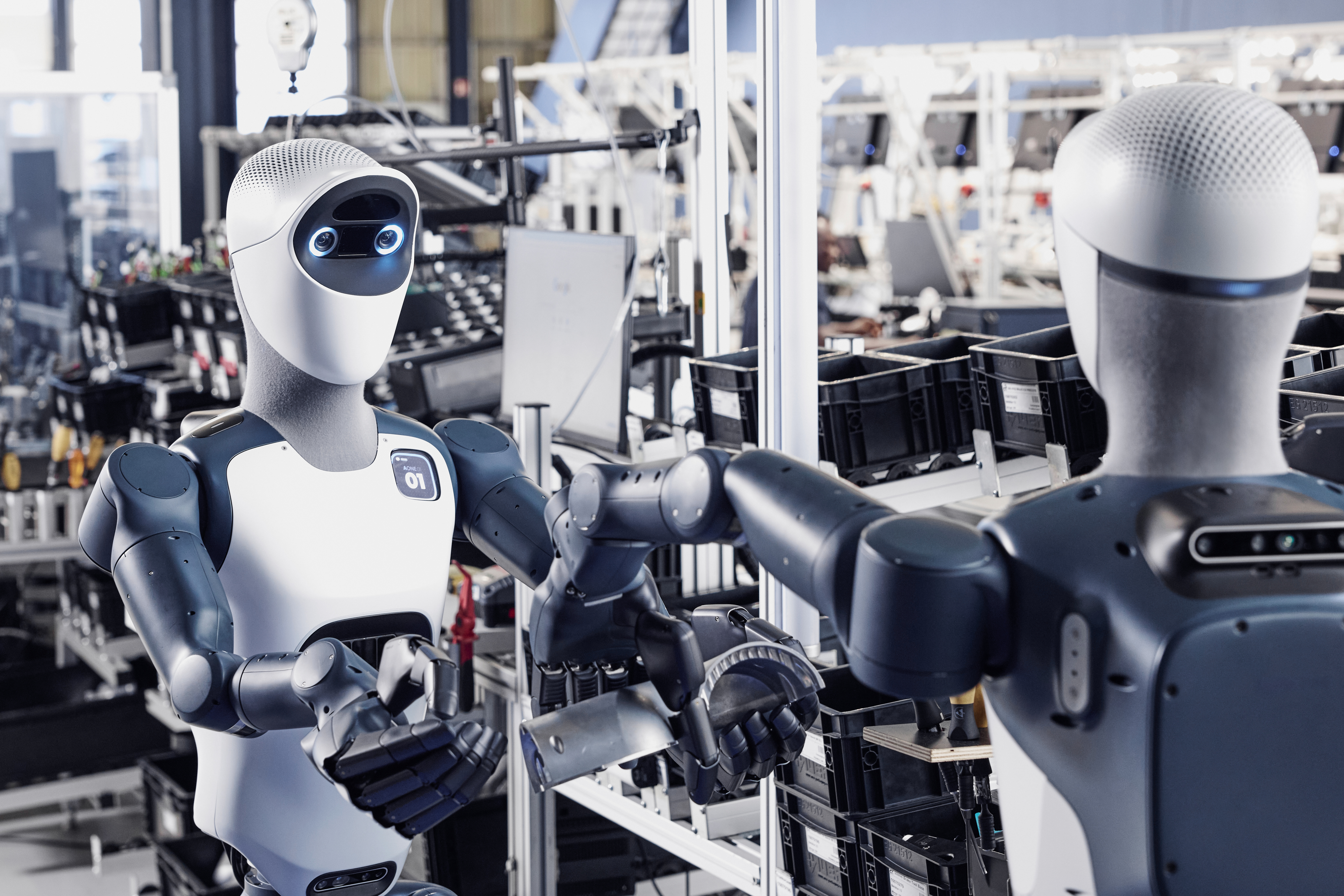

AI is transforming the way we work, making processes smarter, more accessible, and increasingly autonomous. Static systems are now evolving into adaptive, responsive solutions. At Agile Robots, we give AI a body, combining sophisticated algorithms with state-of-the-art robotics to bring artificial intelligence into the physical world - and unlock real impact on the factory floor.

What is Physical AI?

Physical AI allows machines to sense, understand, and interact with the physical world. It bridges the gap between the digital world and robotics, bringing AI into tangible environments. Hardware gains unprecedented intelligence and adaptability - systems don’t just process data; they can act on it.

How does Physical AI work?

Seeing, hearing, touching - these are the most important senses of the human body. They help us perceive our environment, make decisions, and act purposefully.

Physical AI follows the same principles: machines perceive their surroundings, interpret the information, and translate it into precise movements - much like the human body.

Thanks to cutting-edge sensors, machines can replicate human senses. Cameras serve as eyes, microphones as ears, and tactile sensors as the sense of touch, capturing high-resolution data in real time and transmitting it to connected AI systems.

Advanced signal processing, neural networks, and sensor data fusion create an accurate understanding of the environment. The AI then processes and interprets this information, transforming it into precise, targeted actions.
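To make the idea of sensor data fusion concrete, here is a minimal sketch of one simple and widely used approach: an inverse-variance weighted average of independent readings. It is an illustration only and does not describe how Agile AI fuses data. The `Reading` type, the `fuse` function, and the sample values are assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    """One distance estimate in metres with its noise variance (illustrative)."""
    value: float
    variance: float


def fuse(readings: list[Reading]) -> Reading:
    """Combine independent readings with an inverse-variance weighted average."""
    if not readings:
        raise ValueError("need at least one reading")
    # Less noisy sensors (smaller variance) receive a larger weight.
    weights = [1.0 / r.variance for r in readings]
    total = sum(weights)
    value = sum(w * r.value for w, r in zip(weights, readings)) / total
    # The fused variance is smaller than that of any single input.
    return Reading(value=value, variance=1.0 / total)


# Hypothetical example values: a noisier camera depth estimate and a
# more precise short-range tactile/proximity estimate of the same distance.
camera = Reading(value=0.52, variance=0.04)
tactile = Reading(value=0.50, variance=0.01)
fused = fuse([camera, tactile])
print(f"fused distance: {fused.value:.3f} m, variance: {fused.variance:.4f}")
```

The fused estimate sits closer to the more reliable sensor, and its variance is lower than either input, which is the basic reason for combining several sensors in the first place.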

Interpretation by generative AI

This interpretation is carried out by generative models - such as GPT-style large language models (LLMs) or Robotics Foundation Models - that can process multimodal data like images, audio, and sensor inputs simultaneously to generate robot movements.

GPT (Generative Pre-trained Transformer) was originally developed for language understanding and generation. In Physical AI systems, such a model can also be used for the semantic interpretation of sensory information: it recognizes patterns, draws conclusions, and generates action plans - similar to how the human brain combines language and perception to make decisions.

Our Robotics Foundation Models go a step further. They are specifically designed to process multimodal inputs - such as camera images, voice commands, or tactile measurements - together. From this, they can break down complex tasks and derive motion sequences and interactions in the physical world. This enables robots to autonomously perform complex tasks in unstructured environments, such as sorting goods in warehouses, grasping and manipulating objects, or responding to spontaneous instructions while a task is in progress.

Overall, generative AI models form the cognitive-control core of modern Physical AI systems. They allow machines not only to process data but to understand meaning and context - and to translate this understanding into adaptive, physical behavior.

These systems continuously improve through methods like reinforcement learning, where the AI gradually learns better strategies by interacting with its environment, and imitation learning, where it learns from demonstrations. Combined with sensor fusion, multimodal representation, and generative learning, this creates a new form of embodied intelligence that increasingly blurs the line between the digital and physical worlds.
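As a toy illustration of learning by interaction, the sketch below uses tabular Q-learning, one classic reinforcement learning algorithm, on a five-cell corridor where the only reward is at the right end. The environment, the function name `train_corridor`, and all hyperparameters are assumptions chosen for this example. Real robot learning uses far richer state, action, and reward definitions.

```python
import random


def train_corridor(n_states=5, episodes=200, alpha=0.5, gamma=0.9,
                   epsilon=0.1, seed=0):
    """Tabular Q-learning on a toy corridor: start at cell 0, goal at the right end."""
    rng = random.Random(seed)
    # q[state][action], where action 0 = move left and action 1 = move right.
    q = [[0.0, 0.0] for _ in range(n_states)]
    goal = n_states - 1
    for _ in range(episodes):
        state = 0
        while state != goal:
            # Epsilon-greedy choice; ties are also broken at random.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            # Moving left from cell 0 leaves the agent where it is.
            next_state = max(0, state - 1) if action == 0 else state + 1
            reward = 1.0 if next_state == goal else 0.0
            # Move the estimate toward reward + discounted best next value.
            target = reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


q_table = train_corridor()
# Greedy policy for every non-goal cell.
policy = ["right" if right > left else "left" for left, right in q_table[:-1]]
print(policy)
```

The agent is never told that moving right is correct. It discovers this from the reward signal alone, which is the core idea behind learning strategies through interaction.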

How Agile Robots uses Physical AI

At Agile Robots, we take a holistic approach to automation: We look at processes as a whole, automate entire production lines, and deliberately leverage the interplay of cutting-edge technologies to achieve optimal results.

This interplay depends on the seamless interaction of hardware, software, and artificial intelligence. For us, this means that our robots, peripheral devices, and the AgileCore software platform - supported by Agile AI - together with the Robotics Foundation Models, form an intelligent, integrated system.

Sensors perceive the world, Agile AI and the models interpret it - and our robots translate it into precise, sensitive movements.

Versatile applications

Physical AI opens up almost limitless applications in industry. Wherever precision, flexibility, and intelligent action are required, it creates new opportunities for automation and efficiency.

For example, at automatica 2025, we demonstrated how Physical AI can be used in server maintenance. On-site, visitors had the chance to instruct our mobile dual-arm robot using natural language. Agile AI then took care of the rest.

After receiving the command, the robot executed the task with precision. It autonomously moved to the server rack, identified the hard drive slots, and carefully removed the existing drives with tactile sensitivity. It then placed the appropriate drives into the empty slots.

This process is almost entirely autonomous: Physical AI coordinates perception, decision-making, and action, allowing the robot to respond independently to different situations. Minimal human intervention is required - primarily to monitor the process and handle rare corner cases.
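The demo sequence described above can be pictured as an ordered plan of primitive steps, with a hand-over to a human when a step fails. The sketch below is only an illustration: the step names, `plan_drive_swap`, and `run` are hypothetical and are not part of AgileCore or Agile AI, and the plan is written by hand where a real system would have a model generate it from the spoken command.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Step:
    action: str  # hypothetical primitive name
    target: str


def plan_drive_swap(rack: str, slots: list[int]) -> list[Step]:
    """Hand-written stand-in for a plan that a model would generate."""
    steps = [Step("navigate_to", rack), Step("locate_slots", rack)]
    # Remove all existing drives first, then fill the empty slots.
    steps += [Step("remove_drive", f"{rack}/slot-{s}") for s in slots]
    steps += [Step("insert_drive", f"{rack}/slot-{s}") for s in slots]
    return steps


def run(steps: list[Step], execute: Callable[[Step], bool]) -> list[Step]:
    """Execute steps in order and return the step that needs human attention, if any."""
    needs_human = []
    for step in steps:
        if not execute(step):
            # Stop and hand over rather than continue after a failure.
            needs_human.append(step)
            break
    return needs_human


plan = plan_drive_swap("rack-A", [1, 2])

# Simulated executor: every step succeeds except inserting into slot 2.
def simulated(step: Step) -> bool:
    return not (step.action == "insert_drive" and step.target.endswith("slot-2"))

for step in run(plan, simulated):
    print(f"operator needed for: {step.action} at {step.target}")
```

Keeping the plan as plain data makes it straightforward to log each step and to show an operator exactly where a rare corner case occurred.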

Benefits for industry

The advantages are clear: Physical AI increases efficiency, reduces errors, and allows flexible adaptation to changing production conditions. Processes that were once time-consuming and prone to mistakes are now carried out reliably and precisely.

At the same time, the technology lowers the barriers to complex automation solutions, as users do not need deep programming knowledge. Instead, processes can be initiated simply through natural language input.

The technology also enables continuous process optimization, as machines learn independently from their actions and make workflows increasingly efficient. Sensor data is analyzed in real time, processes are monitored, and patterns are identified that indicate potential improvements.

Physical AI thus opens up new possibilities for innovative automation solutions - from assembly and quality control to the maintenance of complex systems. Agile Robots combines productivity, precision, and adaptability in unprecedented ways, actively shaping the future of intelligent production.